· Brittany Ellich · reflection · 6 min read

AI Has an Image Problem

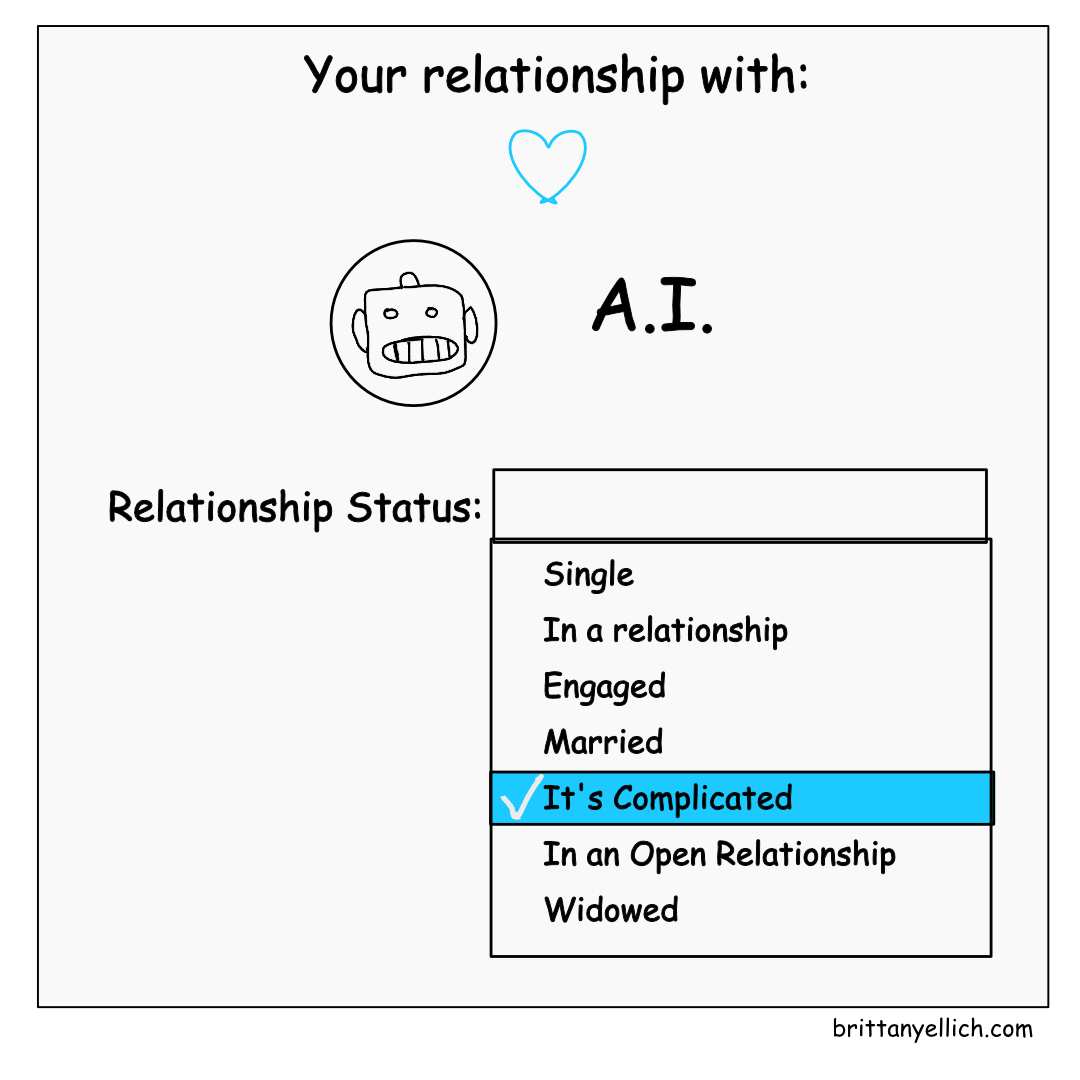


I spent 2025 going from skeptical to genuinely excited about AI tools. My non-tech friends and family spent 2025 learning to hate them.

It’s not that I think everyone needs to love AI. I understand exactly why they don’t, and it was completely avoidable. The AI industry has fumbled this introduction so badly that we’ve turned a useful set of tools into a cultural flashpoint.

How we got here

The messaging around AI has been pretty bad. We sold it with apocalyptic predictions (“this may exterminate the human race by 2035”) while simultaneously mandating adoption (“use AI or else”). We promised it would take everyone’s jobs while we were simultaneously experiencing economic turbulence and widespread layoffs.

And it worked - we successfully made everyone hate AI. I’m not sure there is anything as polarizing right now as opinions on AI. They’re practically political beliefs, tied to the same identity space that makes it extremely hard to change anyone’s thoughts or have a productive conversation about it. If you mention AI in certain online spaces, folks act like it’s a personal attack.

Between the hype, the fear-mongering, and the very real controversies (gender and race bias, AI-generated art, whatever Grok is doing), we’ve created the perfect conditions for resentment. When people are worried about making rent, “your job will be automated away” isn’t exactly inspiring enthusiasm.

What actually using these tools taught me

Here’s the thing though: the more I actually use AI tools, the less I believe the sensational narratives. Both the utopian ones AND the dystopian ones.

Remember when computers were going to take all the jobs? When ATMs would eliminate bank tellers? It turns out inventing new tools doesn’t typically result in mass unemployment - it just changes what work looks like. And if my year on a team heavily using AI has taught me anything, it’s that there will always be more work to do. There is no “end of the backlog.” Doing work begets more work.

But getting to that realization required climbing a steep learning curve. And this is where the gap between my experience as someone interested in learning AI and the experience of the AI naysayers in my life makes a lot of sense.

The tools are legitimately hard to use well

Code completions were easy to adopt because they fit existing patterns. Chat tools were easy because we were already Googling things. But agents? Agents require entirely new mental models. They require delegation skills many developers don’t have. They require tolerance for bad results while you figure out how to use them effectively.

Most people won’t stick around through that learning curve. If something gives you bad results repeatedly, you label it as a bad tool and move on. And then you tell everyone you know that AI tools are garbage.

They’re also wrong a lot. Which, honestly, so is Stack Overflow. But the difference is we know we are supposed to verify Stack Overflow answers. We need the same skepticism for AI outputs, but the hype made it sound like these tools were infallible.

And even once you get good at them, there are real costs. The context switching required to use agents effectively goes against everything we know about deep work. It took me months to develop a workflow that actually works - time-boxing my day between “asynchronous development” (delegating to agents) and “synchronous development” (deep focus work). That’s a lot of experimentation required for tools that were marketed as magical productivity enhancers.

Where they actually shine

But here’s why I’m still excited: when you push through that learning curve and find the right use cases, these tools are genuinely transformative.

They’re not great for precision-critical work. I wouldn’t use them on financial data or anywhere being wrong has serious consequences. But for building scripts to analyze that data? Perfect. For brainstorming different ways to solve a problem? Fantastic. For finally tackling that tech debt backlog? Incredible.

That last one is what really got me. While I’m sure my productivity on normal projects has increased, I’m not just using AI to continually crank out more features - I’m getting way more passion projects done. And instead of filing tech debt issues that’ll die in the backlog graveyard, I can immediately assign them to GitHub Copilot and see what it does with them.

I’m doing more refactoring than ever, and that’s resulting in more quality improvements to my codebase. That means better quality software. That’s the real value proposition, and nobody’s selling it that way because “ship features faster” sounds better in a pitch deck.

The path forward

So where does this leave us in 2026?

The good news: I think we’re finally reaching the point where honest conversations can happen. The hype cycle is slowing down, and the industry is accepting that AI won’t change the world as dramatically as predicted. While that has scary economic implications for companies that invested billions based on those predictions, it opens space for reality.

The bad news: the damage might already be done. Anti-AI sentiment will likely get worse before it gets better. We’ll need entirely new strategies to convince people these tools are worth trying, and now that anti-AI sentiment is tied to folks’ identities, it’s going to be really hard to get those folks bought in.

I’m cautiously optimistic that 2026 will be the year of practical honesty. I may be biased by the space I work in, but I think developer tools will lead the way in realistic messaging. We’ll get comfortable saying “it doesn’t work for X, but it’s great for Y.” Companies will stop mandating AI use and instead show people why they actually WANT to use it.

I also think we will finally accept that the fundamentals still matter. You can’t have effective AI users without effective developers first. It’s sort of like a calculator: you can’t give a 5-year-old a calculator and expect them to be able to do all math. The same principle applies here. You have to do the work without AI first, to understand how to build software, before you use AI tools to make it go faster.

The hype ending means real work can begin. We’re moving from “AI will save/destroy us” to “AI is a tool, let’s get good at it.” We need honest conversations about limitations. We need to invest in fundamentals instead of assuming tools replace them. We need to repair the damage done by apocalyptic marketing and mandatory adoption policies.

So here’s what I’m committing to in 2026: radical honesty about where AI works and where it doesn’t. When I talk about these tools, I’m going to lead with the limitations, not bury them. I’m going to talk about the learning curve, not pretend it doesn’t exist. I’m going to show the actual work, not the highlight reel.

The industry won’t fix AI’s image problem with shinier marketing. We’ll fix it by being honest about what we’re actually experiencing - the good, the bad, and the “it took me three months to figure out a workflow that didn’t make my head explode.”

The work ahead isn’t technical - it’s cultural. And that might actually be harder than the technical challenges. But if enough of us commit to honest conversations, 2026 could be the year things start to shift.
