· Brittany Ellich · tutorial · 11 min read

A Software Engineer's Guide to Agentic Software Development

I've cracked the code on breaking the eternal cycle - features win, tech debt piles up, the codebase becomes 'legacy', and a rewrite eventually follows. Using coding agents at GitHub, I now merge multiple tech debt PRs weekly while still delivering features. Tickets open for months get closed. 'Too hard to change' code actually improves. This is the story of the workflow.

I’ve watched the same cycle at every company: product teams prioritize features over codebase improvements, tech debt piles up, engineers get frustrated, and the application becomes more brittle and difficult to work in over time. We all know we should tackle tech debt incrementally, alongside delivering features, but there’s never time.

What if I told you I’ve found a way to finally break this cycle?

I work for GitHub, one of the largest developer platforms, serving everyone from the largest enterprises to the smallest open source projects. Working at GitHub means I get to test developer tools before they’re widely available. My team and I have been using the GitHub Copilot coding agent for over a year now, and I think I’ve found a new method of completing work with it that means I no longer have to choose between delivering features and improving the codebase. I’ve been referring to this as Agentic Software Development.

I use GitHub Copilot through work, but this workflow likely applies to other coding agent tools as well. I genuinely think coding agents are a game changer for building and maintaining complex software systems. And this is the story of how I do it.

The Opportunity

I believe we’re on the precipice of a change in how software is written. Like the introduction of the Manifesto for Agile Software Development or Test-Driven Development, we’ve reached a point where a new approach may forever change how we write software: Agentic Software Development.

This is not vibe coding - describing what you want to an LLM and blindly accepting the response. Vibe coding is a fine prototyping and brainstorming tool, but for production systems, it’s not practical or safe.

Agentic Software Development is the practice of systematically delegating well-scoped, clearly-defined tasks to AI coding agents while you focus on development work that requires more human judgment.

There’s a specific type of task ideal for this workflow: small, well-scoped tasks that often include the “how” for completion as well as the “what”. This means Agentic Software Development isn’t great for exploration or ideation. But it’s excellent for tech debt.

Tech debt is my catch-all term for “anything that will improve the reliability, scalability, or maintainability of the software application but isn’t a new feature.” These tasks range from refactoring hard-to-read code to decoupling parts of your API layer to renaming functions for consistency. They’re tasks developers lovingly craft to invest in long-term maintainability and scalability - tasks whose benefit might not be as visible to users as a new feature, but that will definitely benefit them with a more stable and reliable application.

Tech debt tasks are traditionally hard to quantify, and hard-to-quantify tasks often don’t get prioritized. That makes sense from the business side: features make customers happy, happy customers mean more money, and more money is good. It’s hard to argue with that logic.

But as a software engineer with ownership and pride in the applications I maintain, this cycle is painful. I’ve seen it repeatedly throughout my career. Problems build up, resentment grows, the application gets labeled “legacy.” Parts of the codebase become walled off as “too difficult to change” and rarely evolve. Eventually these issues snowball into a complete rewrite to “do it right this time” - spending months or years rebuilding the same exact application, and the result rarely turns out better maintained or on time.

We all know we should tackle tech debt alongside feature work, a little at a time. But there’s never enough time! That’s where Agentic Software Development comes in. Instead of letting tech debt pile up forever, I propose that software engineers modify their workflow to complete those additional tasks alongside delivering features. This isn’t about delivering features faster (although it eventually does as the codebase becomes easier to work in). It’s about making software systems genuinely better using coding agents while continuing to deliver business value.

We haven’t been in a situation before where we could easily and cheaply delegate numerous tasks. If I asked another engineer to take on ten small tasks and return in an hour with proposed solutions, they’d likely quit. But a coding agent can handle all of them, create complete pull requests, and ensure CI passes, all without a single complaint. Coding agents are uniquely suited for this: they operate tirelessly, can complete as many tasks as you assign, and cost basically nothing. As an example, a single GitHub Copilot coding agent task costs one premium request, and most accounts include 300-1500 premium requests monthly. Even if you exhaust those, additional requests are only $0.04 each. Contrast that with 2-8 development hours per task for each of those 10 tasks… the value is obvious.
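To make that comparison concrete, here is a minimal back-of-the-envelope sketch. The task count, the $0.04 price, and the 2-8 hour range come from the paragraph above; the hourly rate is a hypothetical placeholder, so substitute your own. It treats every task as a paid extra request, which is the worst case for the agent, and it leaves out the time you still spend writing the issue and reviewing the PR.

```python
# Figures below come from the paragraph above, except HOURLY_RATE,
# which is an assumed placeholder for illustration only.
TASKS = 10
PRICE_PER_EXTRA_REQUEST = 0.04  # USD per premium request beyond the included quota
HOURS_PER_TASK = (2, 8)         # low and high estimate of developer hours per task
HOURLY_RATE = 100.0             # USD per developer hour (hypothetical)


def agent_cost(tasks: int) -> float:
    """Worst case for the agent: every task is billed as an extra request."""
    return round(tasks * PRICE_PER_EXTRA_REQUEST, 2)


def developer_hours(tasks: int) -> tuple[int, int]:
    """Low and high estimate of hours to do the same tasks by hand."""
    low, high = HOURS_PER_TASK
    return tasks * low, tasks * high


def developer_cost(tasks: int) -> tuple[float, float]:
    """Low and high estimate of cost at the assumed hourly rate."""
    low, high = developer_hours(tasks)
    return low * HOURLY_RATE, high * HOURLY_RATE


print(f"Agent: ${agent_cost(TASKS):.2f}")
low_h, high_h = developer_hours(TASKS)
print(f"By hand: {low_h}-{high_h} hours")
low_c, high_c = developer_cost(TASKS)
print(f"By hand at ${HOURLY_RATE:.0f}/hour: ${low_c:,.0f}-${high_c:,.0f}")
```

Even with a much lower hourly rate, the gap stays several orders of magnitude wide, which is why the per-request price barely matters to the decision.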

The Process: If You Know It → Write It → Ship It

Agentic Software Development boils down to three steps: If you know it → Write it → Ship it. Here’s what that means in practice.

If You Know It: Triage What to Delegate

The most important part of this workflow is triaging issues: figuring out what’s good for a coding agent versus what you should do yourself. My guideline:

Anything I know exactly how to do goes to the coding agent. Anything requiring exploratory work or investigation is something I do.

This comes down to understanding what I’m good at versus what a coding agent is good at. I’m really good at navigating ambiguity and reading between the lines on requirements. Sometimes the thing we’re asking for isn’t actually what will solve the problem - it takes digging to address the underlying issue.

Coding agents aren’t great at navigating ambiguity yet. However, they excel at other things. They’re great at tasks I’d find boring or repetitive: updating naming conventions across the application or handling the update for breaking changes from a new dependency version.

To clarify this delineation, here are examples:

Good candidates for agent delegation:

- “Rename all instances of getUserData() to fetchUserProfile() and update tests”

- “Update all API endpoints to use the new error response format from [link]”

- “Add TypeScript types to all functions in utils/ directory”

Bad candidates:

- “Users report slow load times, investigate and fix”

- “Improve dashboard performance”

- “Fix bug causing test failures” (when you don’t know the root cause)
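The triage guideline can be written down as a tiny decision rule. This is only a sketch of how I would encode it: the `Task` fields and the `triage` function are hypothetical names made up for illustration, not part of any tool, and the two yes/no answers still come from your own judgment.

```python
from dataclasses import dataclass


@dataclass
class Task:
    title: str
    solution_known: bool       # can you explain the exact fix up front?
    needs_investigation: bool  # is the root cause or approach still unclear?


def triage(task: Task) -> str:
    """Return "agent" for tasks worth delegating, "human" otherwise."""
    if task.solution_known and not task.needs_investigation:
        return "agent"
    return "human"


# Examples taken from the lists above.
backlog = [
    Task("Rename getUserData() to fetchUserProfile() and update tests", True, False),
    Task("Users report slow load times, investigate and fix", False, True),
    Task("Fix bug causing test failures", False, True),
]

for task in backlog:
    print(f"{triage(task):5}  {task.title}")
```

The rule is deliberately conservative: anything that still needs investigation stays with a human, even if you have a hunch about the fix.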

Write It: Craft Clear Specifications

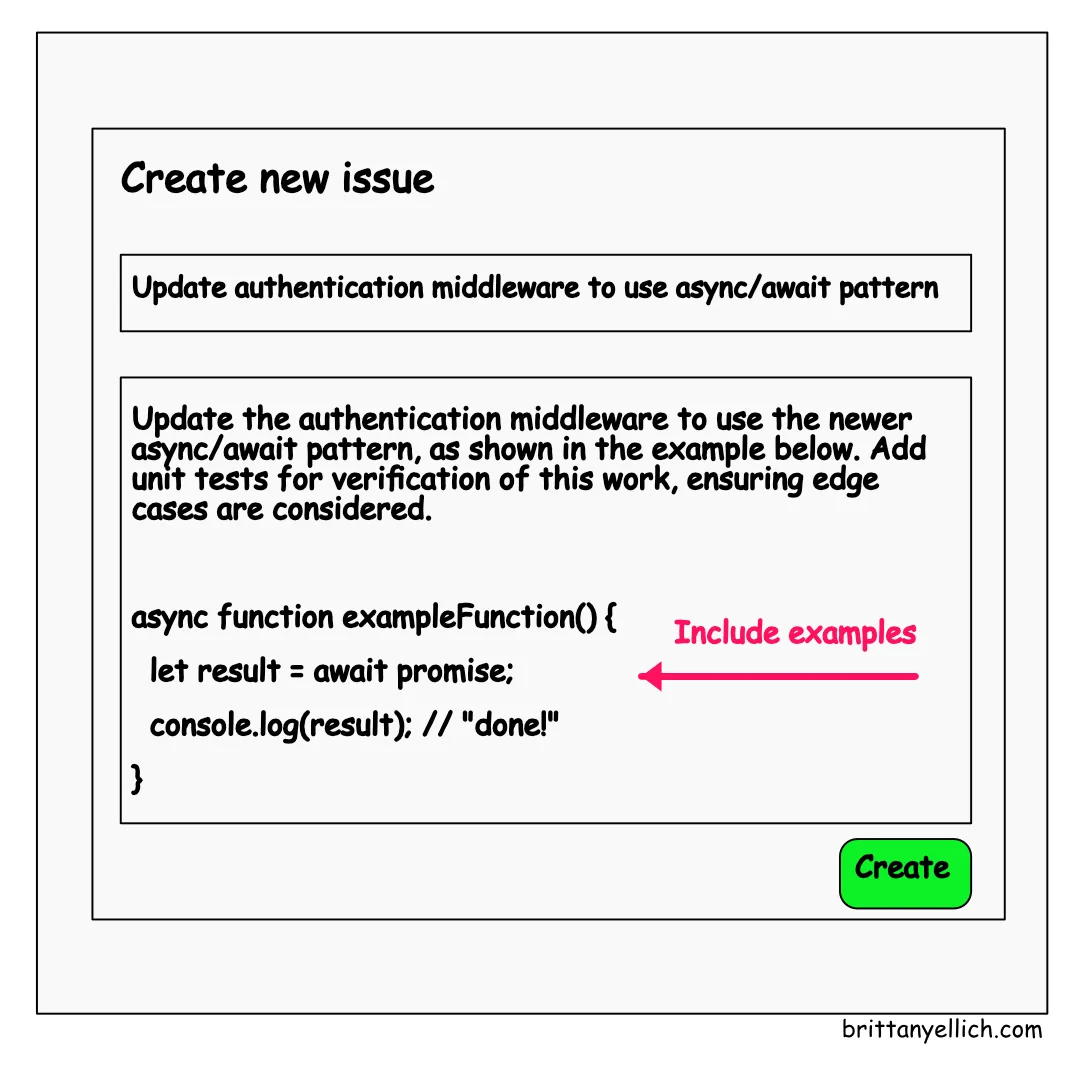

Once you’ve identified a task to delegate, you need to write it clearly enough that the agent can execute it. Sometimes this means breaking a larger task into smaller sub-tasks to create better-scoped pull requests that are easier to understand and review. My guideline:

The task should be small and well-defined enough that someone brand new to the codebase could complete it without additional context beyond what’s in the issue.

This often means adding more to the issue description than I would for a teammate familiar with our codebase: more documentation, pointers to relevant code, or links to similar past PRs.

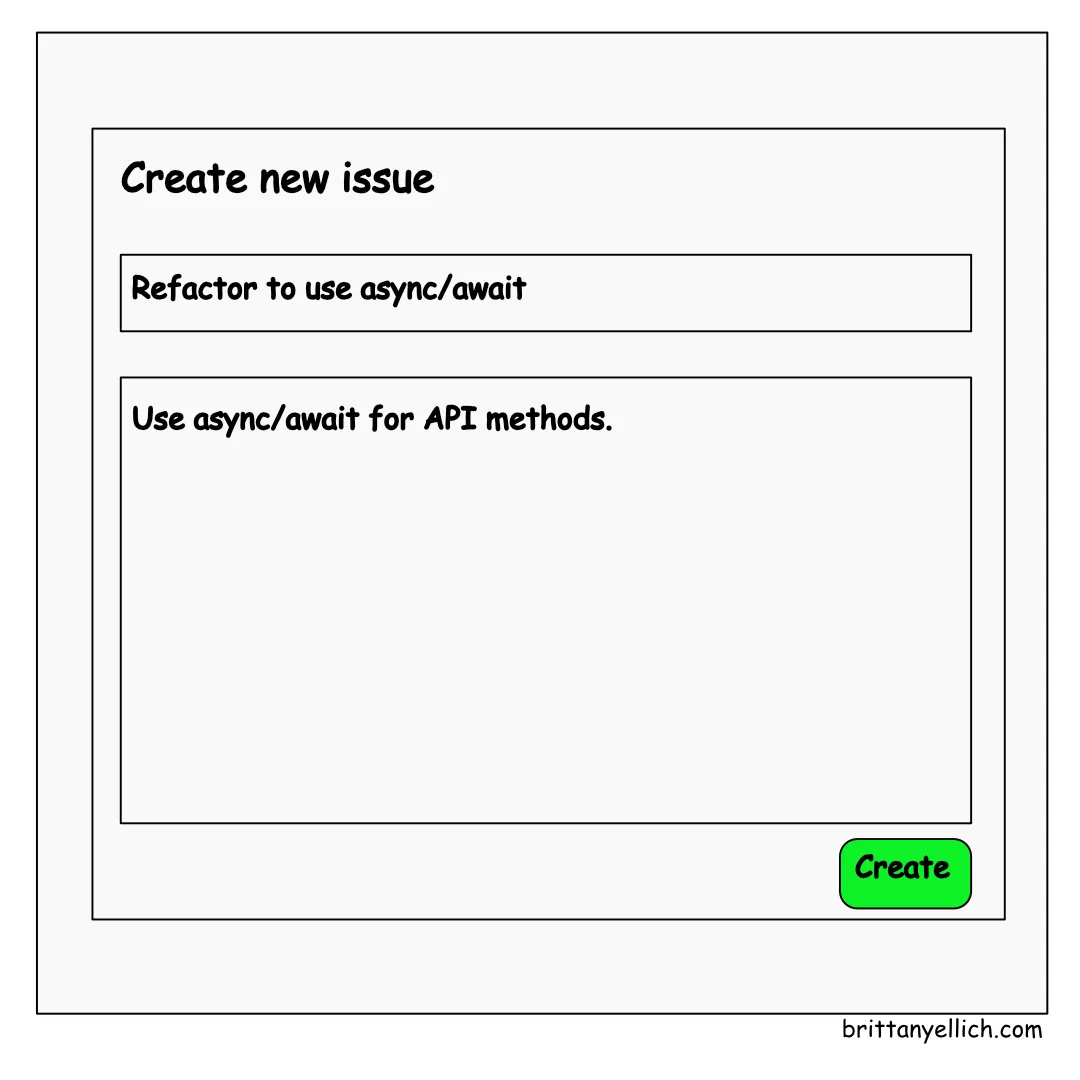

A (not great) issue for a teammate:

A (much better) issue for a coding agent:

I have what might be an unpopular opinion among AI enthusiasts: I don’t care much about testing different models to find the best one for each workflow. The GitHub Copilot coding agent abstracts that away by choosing the best model for the job, and honestly, if given the choice between manual selection or automatic, I’d nearly always choose automatic. I don’t want to fiddle with the tools, I just want to get stuff done.

Much has been said about the art of picking the right model and crafting the right prompt. But after a bunch of trial and error, the “person brand new to the codebase” guideline has been all I’ve needed to get good results.

Ship It: Validate and Review

Once you delegate the task to your coding agent, it does the execution work. But you’re not done - this is where your judgment comes in. Getting these tasks shipped efficiently requires a few developer experience things that weren’t previously as critical. Simon Willison’s excellent Vibe Engineering article covers required engineering practices more comprehensively, but these are what I’ve optimized for:

Preview Environments

A good preview environment - cheap, quick to spin up - is critical. If you have to set up an entire development environment to validate changes, you defeat the purpose of handing the task off to a coding agent. You want to quickly validate that changes solve the problem and don’t break anything else.

Code Review

Code review is the most human-in-the-loop part of this process beyond writing the specification. A code review process that allows timely feedback is crucial to actually moving faster with agents. Remember: you’re reviewing untested code, so you need to be more thorough than with a teammate’s PR.

What to expect when you start

Agentic Software Development is a very new skillset, although it’s based on familiar engineering skills: defining and refining tasks, reviewing code. Adopting this skillset doesn’t come easily. It takes work to change how you complete tasks to include this method, and your first few agent-created PRs will probably be less than stellar. But I argue it’s worth it to keep trying.

The sooner you adopt this workflow, the sooner your application and team benefit. Applications might not feel “well-maintained” for a while - I’m just now feeling like I’ve made a dent in our sizable backlog of tech debt and it has been several months of Agentic Software Development. But I now operate with this workflow regularly and see how much more of our backlog gets completed, and how much easier it is to add new features. It’s motivating.

There’s another reason to invest now: I’m not an economic expert, but AI tools will likely be more expensive in the future. This is probably as cheap as they’ll ever be, so you might as well learn and experiment now, while the cost is likely at its lowest.

That said, changing your workflow isn’t easy. Any change to how you work is uncomfortable at first. This workflow requires more context switching than working on a single task does, and some experimenting to figure out what works for you.

I rank the cognitive complexity of Agentic tasks somewhere between “reviewing a peer’s code” and “completing a task myself.” I need to think more about work the coding agent completed than I would for a teammate’s PR, because no human has touched or tested it yet. Given that, I can typically handle fewer Agentic tasks than peer PR reviews in a day. That means I can maintain context on 3-4 Agentic tasks at a time before context switching becomes too expensive and the gains get overshadowed by the cost.

Agentic tasks are also more comfortable when you’re very familiar with the codebase and experienced with this level of context switching. To reduce cognitive load, I typically maintain a single task I’m working on without the coding agent (usually a larger, more exploratory task an agent couldn’t easily tackle), and break my day into focused work on that task and chunks of triaging, reviewing, and merging Agentic tasks.

What does success look like? For me, it’s merging 4-6 tech debt PRs in a week while still delivering my feature work. It’s closing tickets that have been open for months. It’s the satisfaction of seeing “This is too hard to change” sections of the codebase actually get better.

The Time is Now

Here’s the thing about paradigm shifts: the people who adopt them early get a disproportionate advantage. When TDD was new, early adopters built better software while everyone else debated whether it was worth the overhead. When Agile was emerging, early teams shipped faster while others clung to waterfall.

Agentic Software Development is at that inflection point right now.

You can wait until everyone’s doing this and you’re playing catch-up, or you can start building the muscle memory now. You can keep watching your tech debt backlog grow while chasing features, or you can start systematically chipping away at it alongside your regular work.

The barrier to entry has never been lower. Most of you already have access to some kind of coding agent. The cost is negligible. The learning curve is steep but short.

So here’s my challenge: If you know it → Write it → Ship it.

Before you close this tab, go find one tech debt item in your backlog. Something you know exactly how to fix. Something that’s been sitting there for months because there’s “never time.”

If you know it - you can explain the exact solution - then write it with a specification detailed enough for someone brand new to your codebase. Delegate it to your coding agent and ship it after validating and reviewing.

That’s it. That’s the first step. And finding a task, adding details, and assigning it to a coding agent like GitHub Copilot probably takes less time than you spent reading this blog post.

The software you maintain deserves better than being labeled “legacy.” Your future self - the one who won’t have to live through another frustrating software rewrite project - will thank you.

If you give it a shot, please let me know! Add a comment and share what works (and what doesn’t) as we navigate this new world of Agentic Software Development together!
